In this part of the Voxel Game Series we’re going to talk about logging. Logging is a vital part of any software project, and the same is true for a game engine. Logging allows you to quickly check the state of your systems, trace variables and more.

A quick history of logging

Logging exists as long as programming does[citation needed]. It just means to print some programmer-defined strings whenever a certain event happens:

void foo() {

std::cout << "Doing foo()";

try {

bar();

std::cout << "bar() succeeded! ♥\n";

} catch(const std::exception& e) {

std::cerr << "bar() messed up, the error is " << e.what() << "\n";

} catch(...) {

std::cerr << "bar() messed up, no idea what the error is 😒\n");

}

std::cout << "Done with foo()\n";

}

We can see that the programmer wants to see what’s happening inside foo(). In the “normal” case output goes to cout, in case of an error to

cerr.

Programmers quickly realized that while “printf-debugging” is a good idea (especially to figure out why your program crashed on someone else’s computer), it could benefit from some more structure. There are some pieces of information that are almost always important:

-

The severity of the message: Is it fatal, just an error, merely a warning, some piece of info or very specific debug information?

Commonly fatal is defined as an error that needs the program to abort when occuring (e.g., a

SEGFAULT). An error may be handled by first cleaning up (closing file handles, …) and then terminating the program. Warnings can be remediated by calling code or sometimes even ignored. Info is just for the programmer. Finally, debug is just for debugging purposes and shouldn’t be present in release builds. -

When did the log message occur? Timestamps, ideally with timezone.

-

Where did the log message occur? This often includes the process id, thread id, calling function, name of the source file and line in the source file.

-

Sometime we want to group or tag log messages. Common queries would be “All networking messages”, “what did the Event Manager do?”, and so on.

There are many logging libraries for different languages out there. log4j2,

logback and serilog are widely known and used. Chances are, the programming language of your choice

already has one or more logging facilities…

… except for C++, because the standard library really doesn’t have much.

As a side note: Due to the abundance of logging libraries and frameworks one common pattern programming languages use is to use a logging facade. The

idea is to provide a common API that pipes log message through to the actual implementation. Examples would be SLF4J,

Apache Commons Logging, Castle Windsor’s Logging

Facility or Rust’s log crate. It

is considered best practice for libraries to just use Logging Facades so the consuming application can choose an appropriate implementation.

At the time of writing, C++ doesn’t have a standard logging facade.

Choosing a logging library

While we could implement a simple logger ourselves, it’s tedious work and other people have probably done a better job anyways. So let’s see how we could find a library that fits our use case.

As true programmers™ the first thing we do is ask reddit. The library should be able to handle being embedded in a shared library1. It ideally should be compatible to existing frameworks, such that external tools like Apache Chainsaw2 or Logbert can be used.

The top answer spdlog was really annoying to use in combination with shared libraries. Since we’re going to be using boost anyway, we will just go

ahead and choose Boost.log.

Getting started with Boost.Log v2

When your first look at Boost.Log v2’s Documentation, it’s a lot. Let’s summarize the main concepts. If this summary is too short for you or you want more detail, have a look at the Design Overview.

Quoting my sources

Most of the ideas in the following section are not my own. Instead, I rely heavily on the aforementioned Design Overview.

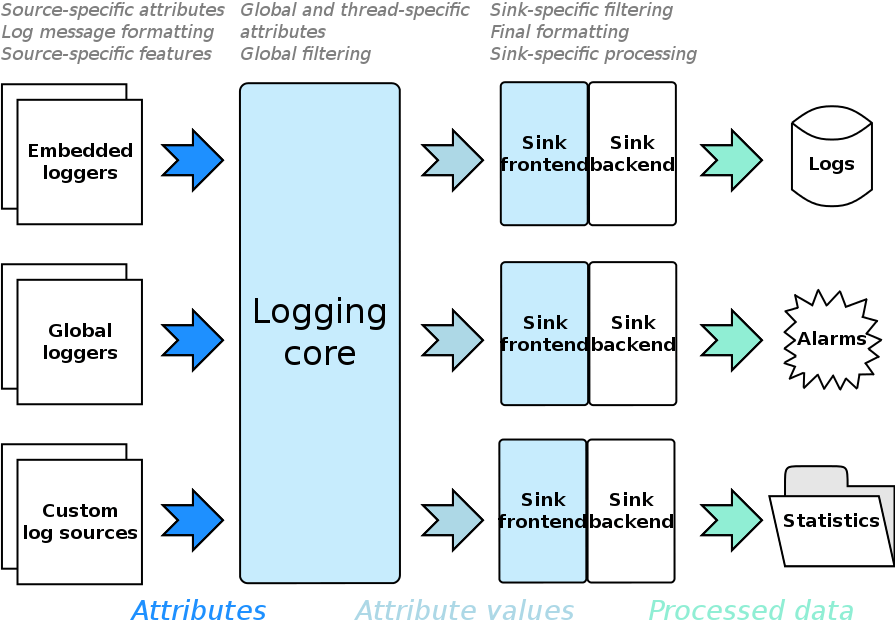

There are log sources. Log sources are entities that initiate logging by constructing a log record. Most of the time a log source is a logger.

Of course, you can develop own log sources (maybe to trace network traffic, capture a child application’s stderr, …), but for the moment, think of

log sources just as either global or embedded (owned by a class) loggers. In fact, for the remainder of the text I’m going to just call it logger.

Loggers pass on data that is associated with a log record to the logging core. We call all data associated with a log record attributes. Or, to be

more precise: Attributes are a means of getting this data, the attribute values. Think of an attribute like a function, and attribute values like

the function’s evaluation. For example, there could be an attribute timestamp with an attribute value of 2022-08-01 12:35:22.

Attributes are scoped into attribute sets: Global, thread-specific and source-specific. While the former two are self-explanatory, we should mention that source-specific attributes depend on the logger. Attributes with the same name are chosen on a priority-basis (global < thread < source). Furthermore, attributes can also be appended on a per-record basis.

Aren't attributes structured logging?

When reading about attributes, one might be reminded of structured logging. Structured logging means not logging strings, but instead machine-readable payloads. These can then later be consumed by data analytics tools and can be used to gain valuable insights into your users.

We will later discuss (in theory) how custom log sinks can be used for that purpose.

The logging core takes log records from the loggers, evaluates their attributes and begins to filter them. Examples of global filtering would include severity based filtering, or muting certain channels. Each record that survived the first filtering phase is filtered again, this time on a per-sink basis. Some attributes are only ever attached after filtering and can thus not be used while filtering.

So what exactly are sinks? Sinks take log records and store them somewhere. They might save them to a file, print them to cout, send them over the

network to ElasticSearch or just plain do nothing. Sinks can be formatting sinks. Formatting is the process of transforming a log record to

something else, like a DB record or a string.

Sinks consist of a frontend and a backend. Many frontends are provided by

Boost.Log and take on common tasks like synchronization,

filtering and formatting. A user can implement their own, but it’s not a common need. Backends should be implemented by the user and contain the logic

of actually dealing with the log messages. Boost.Log provides many

backends, for example text files, text streams (like

cout), (remote) syslog and Windows debugger.

Consuming the library

As mentioned in part 1, we’re going to use a package manager for our external libraries. We need to tell it which dependencies our program has. We do

this in the manifest file. NPM users might understand it as a package.json. In this file

we simply declare the dependencies and their versions.

To actually use such a file, add a new text document called vcpkg.json as a solution item. It should be placed in the src/ directory. Here are its

contents:

{

"$schema": "https://raw.githubusercontent.com/microsoft/vcpkg/master/scripts/vcpkg.schema.json",

"name": "voxel-game",

"version-string": "alpha",

"dependencies": [

"boost-log"

],

"builtin-baseline": "e07c62d05970d7d89e336bdb278b02242e8bda7c"

}

This pulls in Boost as a dependency with at least version 1.81.0, the newest one at the time of writing. In the proposed setup boost libraries are

linked as shared libraries.

Boost.Log can be either linked as a static or as a shared library. It is required that Boost.Log is linked as a shared library here, otherwise this code won’t work (you can’t access the logger from sandbox). See this StackOverflow answer for more information.

Gotcha! Boost.Log's core and its implementations

Internally, Boost.Log has many implementations for the logging core. They live in separate namespaces. The pattern is

<version><linkage>_<threading>_<system>. Version is always v2. Linkage is either “s” for static or empty for dynamic. Threading is either “st”

or “mt”. Finally, system specifies the target platform and configuration. Valid values are posix, nt5, nt6, or nt62. Examples would be

v2s_st or v2_mt_posix.

When consuming the library by including the headers we need to make sure that our client library uses the same <system> that vcpkg used. Right

now, it just works, but if you encounter errors this might be a source. It apparently is common enough to warrant its own FAQ entry in the

docs.

Let’s create a global logger

This blog post is just meant as a gentle introduction to logging. We thus only create ony global logger, and don’t go into the weeds of creating multiple loggers in different places (especially since we don’t have any other subsystem, yet). While this series progresses, we might need to create new loggers, in this cases consult the articles of the specific subsystems.

Here be dragons!

The C++ standard makes no guarantees concerning global static initialization order. Thus it is

dangerous to use a global logger for logging before and after main().

Let’s create two new files, logger.ixx and logger.cpp. You can think of them kind of like header and source file pairs. We declare the logger’s

public interface in the *.ixx and use the *.cpp to define some of the inner workings.

The library provides the macros BOOST_LOG_GLOBAL_LOGGER to declare and BOOST_LOG_GLOBAL_LOGGER_INIT to define a global logger.

module;

#include "../prologue.hpp"

#include <format>

#include <iostream>

#include <source_location>

#include <boost/log/common.hpp>

#include <boost/log/sources/global_logger_storage.hpp>

#include <boost/log/sources/severity_channel_logger.hpp>

export module engine.log;

namespace engine {

// This is a MSVC specific hack, in theory std::format_string should exist

export template <typename... Ts>

using format_string = std::_Basic_format_string<char, Ts...>;

BOOST_LOG_GLOBAL_LOGGER(

gLogger,

boost::log::sources::severity_logger_mt<boost::log::trivial::severity_level>)

ENGINE_API void _log(

boost::log::trivial::severity_level level,

std::string_view message,

const std::source_location& loc);

}

Ignoring the using statement for now (since it’s a MSVC-specific hack), the meat of the file lies in BOOST_LOG_GLOBAL_LOGGER. Here, we define a

new logger called gLogger of type severity_channel_logger_mt<severity_level>.

Boost.Log provides us with a variety of possible loggers, that I want to quickly summarize. A detailed list of Boost.Log

loggers is available. All loggers have a thread-safe counterpart

suffixed with _mt.

- A basic

logger: Can process log records. - A severity-aware

severity_logger. It can be customized over the type of the severity (i.e., which severity enum to use). In our code we take advantage of that and define our own enum. - A channel-aware

channel_logger. It allows to specify channels. It has a type parameter for the type of the channel which defaults tostd::string. - We can mix and match loggers, for example

severity_channel_logger. It provides supports for severities and channels.

We don’t export the logger from our library. Instead, we export a _log function (from the library, not the module!), that takes a severity level, a

message and a std::source_location. The latter is an object, that contains information

about the source code, such as a file name, the line number or function names. It essentially replaces the macros __FILE__ and __LINE__.

Initializing the global logger

Notice that we declared our logger, but didn’t initialize it yet. That’s what we’re going to do next:

BOOST_LOG_GLOBAL_LOGGER_INIT(

gLogger,

boost::log::sources::severity_logger_mt<boost::log::trivial::severity_level>) {

Just so we don’t go insane, let’s define some namespace aliases:

namespace expr = boost::log::expressions;

namespace attr = boost::log::attributes;

namespace sources = boost::log::sources;

namespace trivial = boost::log::trivial;

namespace sinks = boost::log::sinks;

namespace log = boost::log;

Then we define our actual logger and add a way to convert from our severity level enum to char.

sources::severity_logger_mt<trivial::severity_level> lg;

log::register_simple_formatter_factory<trivial::severity_level, char>("Severity");

For the logger to be useful, we want to add some attributes. We use

add_common_attributes() to add a log record counter and a time

stamp to all log records. Our global logger gets the attributes File, Line, Column and Function.

log::add_common_attributes();

lg.add_attribute("File", boost::log::attributes::mutable_constant<std::string>("<file unknown>"));

lg.add_attribute("Line", boost::log::attributes::mutable_constant<int>(0));

lg.add_attribute("Column", boost::log::attributes::mutable_constant<int>(0));

lg.add_attribute("Function", boost::log::attributes::mutable_constant<std::string>("<function unknown>"));

Next, we use one of Boost.Logs sink backends (the

text_ostream_backend),

and add std::clog as an output stream. In simple terms: We define a backend that outputs all messages

to std::clog.

const auto backend = boost::make_shared<sinks::text_ostream_backend>();

backend->add_stream(boost::shared_ptr<std::ostream>(&std::clog, boost::null_deleter()));

Last, we choose a sink

frontend. Sync frontends

perform things like record filtering, formatting and exception handling. Because we need to access our logger concurrently, we want access to our

backend to be synchronized. That’s why we’re choosing sinks::synchronous_sink.

using ConsoleSinkT = sinks::synchronous_sink<sinks::text_ostream_backend>;

const boost::shared_ptr<consoleSink_t> console_sink{ new consoleSink_t(backend) };

Now, all that’s left is to add the sink to the global logging core and return our finished logger.

log::core::get()->add_sink(console_sink);

return lg;

}

At this point we have a global logger. Let’s see how we actually log with it.

Implementing basic logging

Before, we defined our function _log. Let’s see its implementation now:

void _log(trivial::severity_level level, std::string_view message, const std::source_location& loc)

{

namespace logging = boost::log;

namespace attrs = boost::log::attributes;

auto& logger = gLogger::get();

logging::attribute_cast<attrs::mutable_constant<std::string>>(

logger.get_attributes()["File"]).set(loc.file_name());

logging::attribute_cast<attrs::mutable_constant<int>>(

logger.get_attributes()["Line"]).set(loc.line());

logging::attribute_cast<attrs::mutable_constant<int>>(

logger.get_attributes()["Column"]).set(loc.column());

logging::attribute_cast<attrs::mutable_constant<std::string>>(

logger.get_attributes()["Function"]).set(loc.function_name());

BOOST_LOG_SEV(logger, level)

<< message;

}

This function doesn’t hold many surprises: It loads a reference to the global logger and sets the file, line, column and function attributes with the

information from loc. It then uses the BOOST_LOG_SEV macro to log the message.

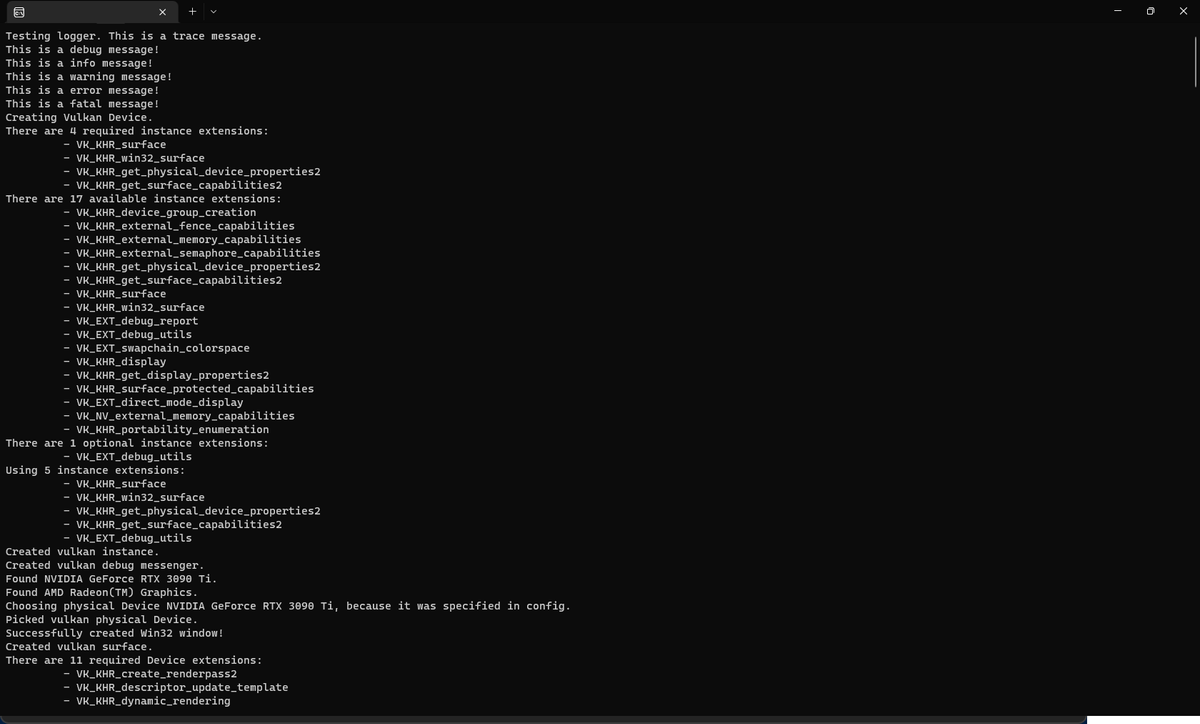

If we use the function _log we get some (not so nice output):

We use formatters to include crucial information

The reason why our output currently sucks, is that we haven’t told the library how to properly format our output. Let’s define some helpers first:

enum Color { kBlue, kCyan, kFaint, kGreen, kMagenta, kRed, kYellow, kSize};

auto coloring_expression(auto fmt, Color color)

{

namespace expr = boost::log::expressions;

return expr::wrap_formatter([fmt, color](boost::log::record_view const& rec, boost::log::formatting_ostream& stream) {

const static auto lookup = std::array{

"\033[34m",

"\033[36m",

"\033[2;90m",

"\033[32m",

"\033[35m",

"\033[31m",

"\033[33m",

};

static_assert(lookup.size() == kSize);

stream << lookup[color];

fmt(rec, stream);

stream << "\033[0;10m";

});

}

We define a function coloring_expression that returns a wrap_formatter that colors our log output with the colors defined in Color. We’re using

the ANSI escape codes to achieve this effect. Essentially, for each enum item, we have an entry in

lookup. We then insert the “print the characters in this color” code, then the message, and then reset the color.

Another useful formatter is one that truncates a string from the front. An implementation might look like this:

std::string_view last_n_backward(const boost::log::value_ref<std::string>& value_ref, size_t n)

{

if (value_ref) {

auto& string = value_ref.get();

if (string.size() <= n)

return string;

return { string.data() + string.size() - n, n };

} else

return "";

}

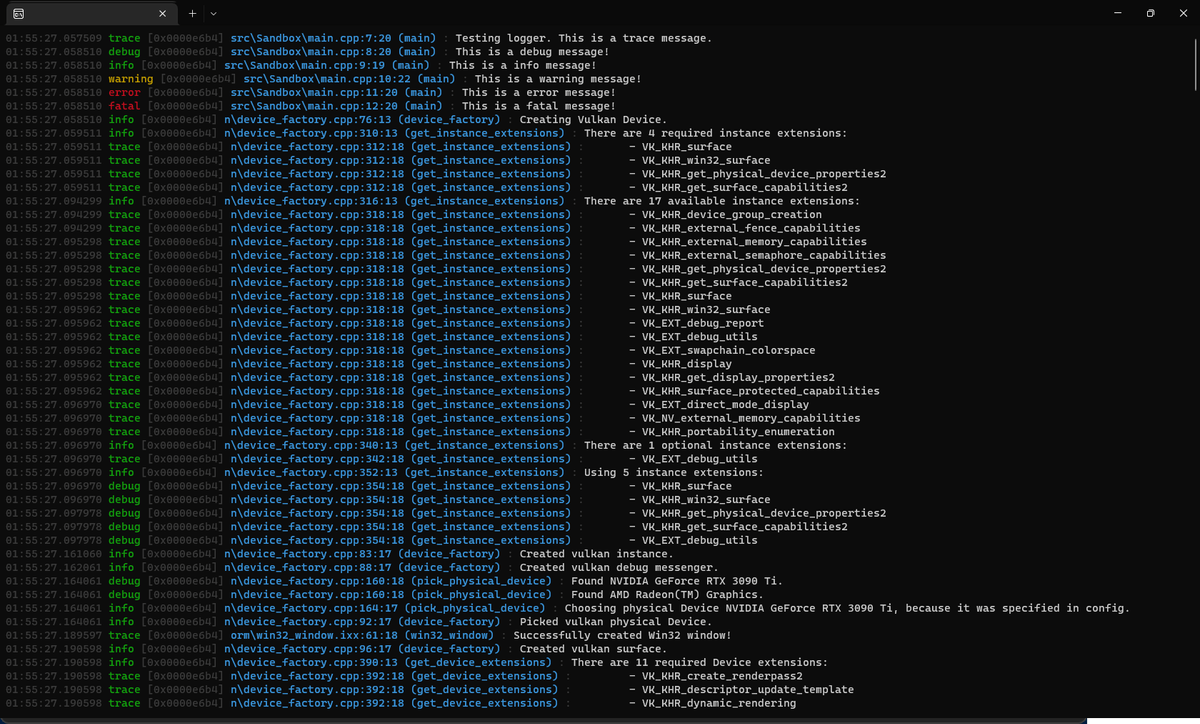

We want our output to look something like this:

We can use Boost.Log’s formatters, to achieve this:

console_sink->set_formatter(expr::stream

First, we set the console sink’s formatter. We’re going to use

the Boost.Log’s lambda style formatter. This begins with expr::stream, which starts a stream of expressions. Think of this whole thing as basically

an iostram on steroids.

<< coloring_expression(

expr::stream << expr::format_date_time<boost::posix_time::ptime>(

"TimeStamp", "%H:%M:%S.%f"

),

kFaint)

<< " "

The first item in the stream should be the date and time formatted with HH:MM:SS.f. For that we use our coloring_expression from earlier to wrap

format_date_time. This in turn uses the TimeStamp attribute that was defined with log::add_common_attributes(). We also add a space.

Now we use std:::setw to set the length of the next item to exactly 5. We also check if the

severity attribute (trivial::severity) is between trace and warning. In this case we print the severity in green. Else, we check if the severity is

a warning – which would imply a yellow color – otherwise we print the severity in red.

<< std::setw(5)

<< expr::if_(is_in_range(boost::log::trivial::severity, trivial::trace, trivial::warning))[

expr::stream << coloringExpression(expr::stream << trivial::severity, kGreen)

].else_[

expr::stream << expr::if_(

is_in_range(trivial::severity, trivial::warning, trivial::error))[

expr::stream << coloringExpression(expr::stream << trivial::severity, kYellow)

].else_[

expr::stream << coloringExpression(expr::stream << trivial::severity, kRed)

]

]

<< " "

Now we faintly want to print the thread id…

<< coloringExpression(

expr::stream

<< "["

<< expr::attr<attr::current_thread_id::value_type>("ThreadID")

<< "] ",

kFaint

)

… and the last 20 characters of our file name, the line in the file, the column and the whole function name.

<< coloringExpression(

expr::stream

<< boost::phoenix::bind(&last_n_backward, expr::attr<std::string>("File"), 20)

<< ':'

<< expr::attr<int>("Line")

<< ':'

<< expr::attr<int>("Column")

<< " ("

<< expr::attr<std::string>("Function")

<< ")",

kCyan) //

<< coloringExpression(expr::stream << " : ", kFaint)

Finally, we want to print the actual message and remove any newlines our clients might have tried to sneak in. In other words log("Foo\n") and

log("Foo") should look exactly the same.

<< expr::smessage

<< expr::auto_newline

);

Helper functions make a nice API

You might’ve noted that our API is pretty verbose. It would be nice if the user could just write log_warning("My log message, value={}", 12) and the

library did the heavy lifting. Luckily, C++20 introduced std::format, which allows us to

nicely format strings. We’re going to implement log_warning as an example here, the rest of the functions are left as an exercise to the reader.

std::format availability

Not all compilers support std::format. Even though it has entered the standard, if you’re on an older compiler this just might not work for you.

So we know that we need three things: The actual format string, the arguments and the source location. Put into code, this looks like this:

template<typename... Ts>

void log_info(

format_string<Ts...> fmt,

Ts&&... ts,

const std::source_location loc = std::source_location::current())

{

_log(trivial::info, std::format(fmt, std::forward<Ts>(ts)...), loc);

}

This takes a variable number of arguments Ts... and constructs a format string from them. All fine and dandy, right? Not quite. Because loc has a

default value, the compiler can’t be sure if std::source_location is part of the Ts... or not. This can be avoided by calling log_info with

explicit template arguments (e.g., log_info<int, float>("{}, {}", 1, 2.f)) but this doesn’t seem really user friendly.

Instead, we (ab)use deduction guides to help the compiler out. types:

export template <typename... Ts> struct log_info {

log_info(

format_string<Ts...> fmt,

Ts&&... ts,

const std::source_location& loc = std::source_location::current()

)

{

_log(trivial::info, std::format(fmt, std::forward<Ts>(ts)...), loc);

}

};

export template <typename... Ts> log_info(format_string<Ts...> fmt, Ts&&...) -> log_info<Ts...>;

export template <typename... Ts> log_info(const char* fmt, Ts&&...) -> log_info<Ts...>;

We transformed log_info into a struct (so we can use deduction guides) and put the _log call in the constructor.

Wait what?

If you have no clue what’s going on: Don’t worry, it’s not too important. Essentially, starting in C++17 we can tell the compiler how to deduce the

types of class templates (similar to how it works with function templates). This is done with deduction guides that basically tell the compiler

where to get the types from. An example use case might be std::array, where you usually don’t have to explicitly specify the type or the size.

A quick discussion of ENGINE_API and export

Keen observers might have noticed that we’re not exporting _log from our module, but we’re exporting it from our DLL. Conversely, we’re exporting

log_info from our module, but not from our DLL.

The reason is simple: templates are instantiated when they’re used. This means that log_info<int, int, int> is different from log_info<float>.

That’s why we can’t export it from a DLL: It’s not a single type that can be exported. We need it to be accessible from the outside though, so that’s

why we make it visible (i.e., export it) from the module.

Internally, log_info uses _log which is a simple function. If log_info is not instantiated in the DLL, it needs to still be able to find _log

to work. That’s why we export it from the DLL. Since it is a library internal function, we don’t need to make it visible to other modules.

Summary

In this article, we implemented logging. We can use it in our application like this:

import engine.log;

int main(int, char**) {

int foo = 2;

float bar = 3.f;

engine::log_info("foo={} and bar={}", foo, bar);

return 0;

}

In the next article, we’re going to implement a simple resource management system.