One of the first tasks in our game engine will be to actually draw something on the screen. We call this process rendering. In this initial article we’re going to take a look at the high-level architecture we’re going to build. In the following articles of the series, we will start building it.

The moving parts of 3D rendering

Let’s start from the beginning. In this context, rendering is the process of converting 3D models into 2D images. Typically, we represent our models as a list of triangles. Each model (or even each vertex in a model) has different properties: A glass cylinder will look different than a solid donut which in turn looks different than the moon. A material defines one set of properties, such as opacity, reflection, light emissivity, and color.
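To make this a bit more concrete, here is a minimal sketch of how a triangle list and a material could be laid out in C++. The type names (Vec3, Material, Mesh), the field choices and the make_quad() helper are assumptions made up for illustration; they are not the engine’s actual types.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Hypothetical types for illustration only.
struct Vec3 { float x, y, z; };

// One set of surface properties, as described above.
struct Material {
    Vec3  color;       // base color, RGB in [0, 1]
    float opacity;     // 0 = fully transparent, 1 = fully opaque
    float reflection;  // 0 = matte, 1 = mirror
    float emissivity;  // how much light the surface emits
};

// A model stored as an indexed triangle list:
// every three consecutive indices form one triangle.
struct Mesh {
    std::vector<Vec3>          positions;
    std::vector<std::uint32_t> indices;
    Material                   material;

    std::size_t triangle_count() const {
        assert(indices.size() % 3 == 0);
        return indices.size() / 3;
    }
};

// Two triangles sharing an edge form a unit quad.
inline Mesh make_quad(const Material& material) {
    return Mesh{
        {{0, 0, 0}, {1, 0, 0}, {1, 1, 0}, {0, 1, 0}},
        {0, 1, 2, 0, 2, 3},
        material,
    };
}
```

In this sketch the material is attached to the whole mesh. Per-vertex properties would instead live next to the positions.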

APIs let you programmatically control the GPU

On the other end of the spectrum we have GPUs. They magically take our models and transform them into 2D images on our screen. There are many different GPU vendors (the major two being Nvidia and AMD, of course). In the early days of 3D rendering, a game developer would need to write specialized rendering code for each different GPU [1].

This is where APIs come in. They define an abstraction over the physical hardware and allow a programmer to address all GPUs (that support this particular API). There are three well known Graphics APIs:

- DirectX was invented by Microsoft and is in use on all devices running Windows as well as Xbox consoles. There are compatibility wrappers for Linux, but they aren’t great from a performance point of view. It has gone through major revisions. DirectX 11 and lower are considered high-level APIs (more on that later), while the newest installment, DirectX 12, is a low-level API.

  Linux driver in the works: While researching for this blog post I found out that Microsoft is apparently working on providing a DirectX driver for Linux and even getting it mainlined. There are a lot of caveats, but it’s still interesting to note. Here’s the relevant blog article.

- OpenGL (Open Graphics Library) is an API that was first standardized in 1992. It runs virtually anywhere (Windows, Linux, macOS) as well as on mobile devices and probably your toaster. It has gone through many major revisions and supports a wide variety of use cases (not only gaming, but also CAD software or even heads-up displays in cars). It is considered a high-level API.

- Vulkan is a newer API and was designed as a successor to OpenGL. The main difference is that OpenGL is considered a more high-level API (since most state management is done by the driver), whereas Vulkan requires its programmers to specify everything explicitly. Vulkan was poised to replace OpenGL as the platform-agnostic API; however, Apple in their infinite wisdom chose to not implement it on macOS and create their own API, Metal, instead [2].

  Interestingly, the Nintendo Switch supports Vulkan.

- Other systems have their own APIs: The PlayStation 4 uses Gnm and Gnmx, two proprietary APIs. While the Switch supports Vulkan, Nvidia also built its own API for the Nintendo Switch.

So what’s the difference between high-level and low-level APIs? It all boils down to the level of control the programmer has over the hardware. Older APIs (like OpenGL and DirectX 11) abstract away a lot of the underlying hardware but in turn provide a more convenient interface. Things that take five lines with the old APIs might well take hundreds of lines with the new APIs. However, because of the detailed control over every aspect of the GPU (including synchronization and memory allocations), the new APIs can be much faster if used correctly. A good analogy [3] might be assembly language and C++. Yes, code in assembly can be faster, but only if people know what they’re doing. It is not going to be faster just because it is written in assembly.

Another aspect to this discussion is the issue of multithreading: Most of OpenGL’s state is global and multithreading can become really challenging.

In our engine we’re going to use Vulkan.

The engine’s low-level renderer abstracts APIs

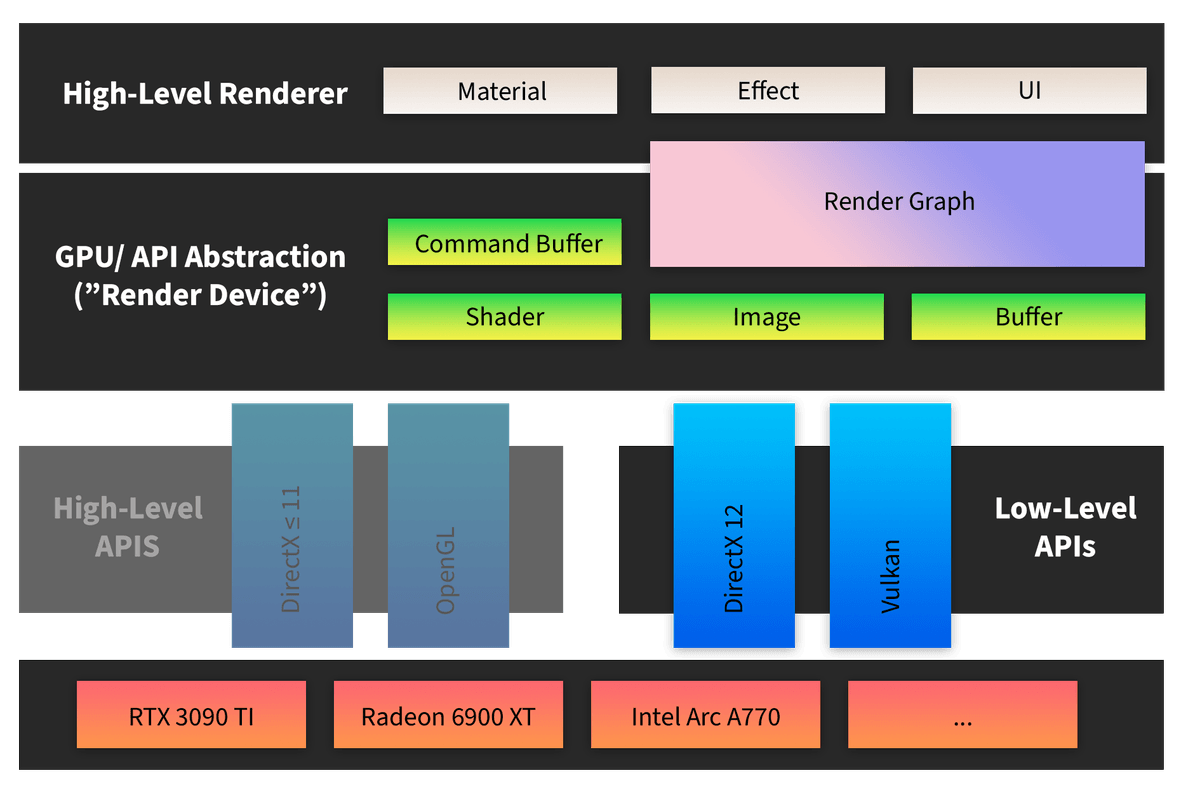

In our engine, we’re going to split rendering into two distinct components: A low-level renderer (the “render device”) and a high-level renderer.

Our low-level renderer abstracts away all API-specific things. Allocating memory on the GPU is different in DirectX 12 and Vulkan, but it should work exactly the same in our low-level renderer:

    // We have interfaces like these...
    class AbstractDeviceAllocation {
    public:
        virtual ~AbstractDeviceAllocation() = default;
    };

    class AbstractRenderDevice {
    public:
        virtual ~AbstractRenderDevice() = default;
        virtual AbstractDeviceAllocation& allocate() = 0;
    };

    // ... and implementations like these:
    class VulkanDeviceAllocation : public AbstractDeviceAllocation {};
    class Dx12DeviceAllocation : public AbstractDeviceAllocation {};

    class VulkanRenderDevice : public AbstractRenderDevice {
    public:
        // Covariant return type: this still overrides the base-class allocate().
        VulkanDeviceAllocation& allocate() override;
    };

    class Dx12RenderDevice : public AbstractRenderDevice {
    public:
        Dx12DeviceAllocation& allocate() override;
    };

We can then use a factory pattern to switch rendering APIs at runtime:

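    #include <memory>
    #include <stdexcept>
    #include <string_view>

    std::unique_ptr<AbstractRenderDevice> device_factory(std::string_view implementation) {
        // Build render device based on param (the "dx12" key is only illustrative).
        if (implementation == "vulkan") return std::make_unique<VulkanRenderDevice>();
        if (implementation == "dx12") return std::make_unique<Dx12RenderDevice>();
        throw std::invalid_argument("unknown rendering API");
    }

    int main(int, char**) {
        auto device = device_factory("vulkan");
        // allocate() returns a reference; bind it with auto& to avoid a sliced copy.
        auto& allocation = device->allocate();
        // ...
    }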

Our low-level renderer will contain these building blocks [4]:

- Images and Samplers. We’re going to need images in GPU memory (i.e. textures). There needs to be a way to upload them to the graphics card and release them once they’re no longer needed.

- Shaders. Shaders are small programs running on the GPU. They’re responsible for converting 3D geometry to the final 2D image on the screen. We’re going to have a few articles dedicated to them.

- Buffers. Buffers are basically just memory on the GPU. They can contain arbitrary data, but most often just contain geometry.

- Command Buffers. Command Buffers are a list of instructions to be executed on the GPU in order to present something.

      auto cmd = device->request_command_buffer();
      cmd.begin_drawing();
      cmd.use_texture("bricks.png");
      cmd.draw_vertices(vertex_list_1);
      cmd.use_texture("dirt.png");
      cmd.draw_vertices(vertex_list_2);
      cmd.flush_to_screen();
      cmd.end_drawing();

- Synchronization Primitives like fences, events and barriers allow you to exploit the GPU’s inherent parallelism. You can wait for one shader to complete before running another, and so on [5].

- Render passes are sequences of operations that perform a specific rendering task (like drawing geometry or calculating lights). They can often be run concurrently.
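To illustrate the command buffer idea from the list above, here is a minimal sketch of recording commands into a list and replaying them later. The method names mirror the pseudocode above, but everything else (the UseTexture and DrawVertices structs, storing commands in a std::variant, and the replay() function that only produces a text log) is an assumption for illustration and not how a real backend submits work to the GPU.

```cpp
#include <cstddef>
#include <string>
#include <utility>
#include <variant>
#include <vector>

// Hypothetical command types; a real backend would translate these into API calls.
struct UseTexture   { std::string name; };
struct DrawVertices { std::size_t vertex_count; };

using Command = std::variant<UseTexture, DrawVertices>;

// Recording only appends to a list; nothing is executed yet.
class CommandBuffer {
public:
    void use_texture(std::string name) {
        commands_.push_back(UseTexture{std::move(name)});
    }
    void draw_vertices(std::size_t vertex_count) {
        commands_.push_back(DrawVertices{vertex_count});
    }
    const std::vector<Command>& commands() const { return commands_; }

private:
    std::vector<Command> commands_;
};

// Stand-in for submission: walks the recorded list in order and describes each step.
inline std::vector<std::string> replay(const CommandBuffer& buffer) {
    std::vector<std::string> log;
    for (const Command& command : buffer.commands()) {
        if (const auto* texture = std::get_if<UseTexture>(&command)) {
            log.push_back("bind " + texture->name);
        } else if (const auto* draw = std::get_if<DrawVertices>(&command)) {
            log.push_back("draw " + std::to_string(draw->vertex_count) + " vertices");
        }
    }
    return log;
}
```

Separating recording from execution is what makes this useful: several buffers can be recorded independently and handed over for execution later.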

The high-level renderer translates from our game to the low-level renderer

The other component mentioned above is the high-level renderer. It translates from our game world to objects the low-level renderer can deal with. That way, we can implement a specific effect in terms of abstractions from the low-level renderer (and thus make it API-agnostic).

We would expect our high-level renderer to have functions like draw_chunk() or draw_npcs(), while our low-level renderer will have functions like draw_vertices(). It is the high-level renderer’s job to figure out how to actually “draw” a chunk.
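A rough sketch of this split could look as follows. Only the function names draw_chunk() and draw_vertices() come from the text; the Vertex and Chunk types, the class names and the one-triangle-per-block simplification are assumptions for illustration.

```cpp
#include <cstddef>
#include <vector>

struct Vertex { float x, y, z; };

// Hypothetical slice of the low-level interface: it only knows about vertices.
class LowLevelRenderer {
public:
    virtual ~LowLevelRenderer() = default;
    virtual void draw_vertices(const std::vector<Vertex>& vertices) = 0;
};

// Hypothetical game-world object: a chunk is a set of block positions.
struct Chunk {
    std::vector<Vertex> block_origins;
};

// The high-level renderer knows what a chunk is and turns it into vertex lists.
class HighLevelRenderer {
public:
    explicit HighLevelRenderer(LowLevelRenderer& device) : device_(device) {}

    void draw_chunk(const Chunk& chunk) {
        std::vector<Vertex> vertices;
        for (const Vertex& origin : chunk.block_origins) {
            // Simplification: one triangle per block instead of a full cube.
            vertices.push_back({origin.x, origin.y, origin.z});
            vertices.push_back({origin.x + 1.0f, origin.y, origin.z});
            vertices.push_back({origin.x, origin.y + 1.0f, origin.z});
        }
        device_.draw_vertices(vertices);
    }

private:
    LowLevelRenderer& device_;
};

// Stand-in backend that only counts what reaches the low-level side.
class CountingRenderer : public LowLevelRenderer {
public:
    void draw_vertices(const std::vector<Vertex>& vertices) override {
        ++draw_calls;
        vertex_total += vertices.size();
    }
    std::size_t draw_calls = 0;
    std::size_t vertex_total = 0;
};
```

Because HighLevelRenderer only talks to the abstract interface, the same draw_chunk() code would work unchanged with any backend that implements draw_vertices().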

Terms we will often hear in this context are:

- Materials describe various properties of objects. Color, texture, translucency and emissivity come to mind.

- UI (user interface) elements are controls like text inputs, scroll bars and checkboxes that the user can interact with.

- Effects can be things like the waving of grass or particle systems.

A render graph bridges the gap between high-level and low-level renderer

Our engine is going to take advantage of a Render Graph (also known as a Frame Graph). The idea is based on the GDC Presentation “Frame Graph: Extensible Rendering Architecture in Frostbite”.

It boils down to this: Scenes require many render passes. Render passes can depend on one another (e.g., when one pass writes to an image resource and a later pass reads from it). We can see each such render pass as a node in a graph. The edges of this graph are the dependencies. Since a pass can never depend on its own output, this gives us a directed acyclic graph.
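As a sketch of how such a graph could be derived, the following code builds the dependency edges from the resources each pass reads and writes. The RenderPass struct, the use of plain resource names, and the assumption that passes are declared in submission order are all made up for illustration; they are not taken from the Frostbite presentation.

```cpp
#include <algorithm>
#include <cstddef>
#include <string>
#include <utility>
#include <vector>

// Hypothetical pass description: the resources a pass reads and writes, by name.
struct RenderPass {
    std::string              name;
    std::vector<std::string> reads;
    std::vector<std::string> writes;
};

// An edge (u, v) means: pass u must finish before pass v starts.
using Edge = std::pair<std::size_t, std::size_t>;

// Passes are assumed to be listed in submission order, so a reader depends on
// the most recent earlier pass that wrote the resource it reads.
inline std::vector<Edge> build_edges(const std::vector<RenderPass>& passes) {
    std::vector<Edge> edges;
    for (std::size_t reader = 0; reader < passes.size(); ++reader) {
        for (const std::string& resource : passes[reader].reads) {
            // Walk backwards from the pass just before the reader.
            for (std::size_t writer = reader; writer-- > 0;) {
                const auto& written = passes[writer].writes;
                if (std::find(written.begin(), written.end(), resource) != written.end()) {
                    edges.emplace_back(writer, reader);
                    break;
                }
            }
        }
    }
    return edges;
}
```

Every edge in this sketch points from an earlier pass to a later one, which is why the resulting graph cannot contain a cycle.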

We can do a topological sort of this graph. This means that for every edge uv, the vertex u comes before the vertex v in the resulting ordering. We can use this information to

- Run all render passes with no dependencies between each other concurrently,

- Cull all render passes whose results are never used (transitively!),

- Insert synchronization points exactly where they are needed, thus getting an optimal order for our rendering and making the most of our GPU resources,

- Alias resources that aren’t needed anymore. In other words: If two render passes need an image that is 1920px by 1080px, and guarantee that they’re going to overwrite the image, we can reuse the same memory location (if we know that they don’t run concurrently).
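The first two points can be sketched in a few lines. The code below culls passes that the final output never depends on and then orders the rest with Kahn’s algorithm, one common way to compute a topological sort. The function names, the index-based pass representation and the single output_pass parameter are assumptions for illustration. Synchronization and resource aliasing are left out.

```cpp
#include <cstddef>
#include <queue>
#include <utility>
#include <vector>

using Edge = std::pair<std::size_t, std::size_t>;  // (u, v): u runs before v

// Marks every pass that the output pass depends on, directly or transitively.
inline std::vector<bool> find_used(std::size_t pass_count, const std::vector<Edge>& edges,
                                   std::size_t output_pass) {
    std::vector<bool> used(pass_count, false);
    std::vector<std::size_t> stack{output_pass};
    used[output_pass] = true;
    while (!stack.empty()) {
        const std::size_t current = stack.back();
        stack.pop_back();
        for (const Edge& edge : edges) {
            if (edge.second == current && !used[edge.first]) {
                used[edge.first] = true;
                stack.push_back(edge.first);
            }
        }
    }
    return used;
}

// Kahn's algorithm over the surviving passes. Returns one valid execution order.
inline std::vector<std::size_t> schedule(std::size_t pass_count, const std::vector<Edge>& edges,
                                         std::size_t output_pass) {
    const std::vector<bool> used = find_used(pass_count, edges, output_pass);
    // pending[v] counts the not-yet-scheduled dependencies of pass v.
    std::vector<std::size_t> pending(pass_count, 0);
    for (const Edge& edge : edges) {
        if (used[edge.first] && used[edge.second]) ++pending[edge.second];
    }
    std::queue<std::size_t> ready;
    for (std::size_t pass = 0; pass < pass_count; ++pass) {
        if (used[pass] && pending[pass] == 0) ready.push(pass);
    }
    std::vector<std::size_t> order;
    while (!ready.empty()) {
        const std::size_t current = ready.front();
        ready.pop();
        order.push_back(current);
        for (const Edge& edge : edges) {
            if (edge.first == current && used[edge.second] && --pending[edge.second] == 0) {
                ready.push(edge.second);
            }
        }
    }
    return order;
}
```

Passes that sit in the ready queue at the same time have no dependencies between each other, so they are the candidates for running concurrently.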

Summary

In this article we have discussed the various components involved in 3D rendering in our engine. We talked about high-level and low-level APIs and decided to use Vulkan for the foreseeable future.

We also discussed that we’re going to have a low-level rendering component that is responsible for abstracting away from graphics APIs, and a high-level component that knows how to draw objects from our game world in terms of our low-level component.

Lastly, we talked about render graphs, a way to optimize rendering with many passes.

In the next article, we’re going to get started with our Vulkan low-level renderer.

Footnotes

1. Some people in the embedded sector still build their own graphics drivers depending on the board they’re working with.

2. There are workarounds, but it’s still annoying that Apple always has to have its own way of doing things.

3. An analogy that ChatGPT came up with, interestingly enough. Honestly, I should let it write my blog posts.

4. This list is still a work in progress, since the renderer isn’t finished yet.

5. High-level APIs would often try to figure synchronization out by themselves. This would often mean that your game could have atrocious performance on one specific driver, just because you were doing things in an order the driver’s authors didn’t expect.